Partner: IFAT, IFAG, RUB, FHR, UNI-KLU, TUG, BHL, IFI, IUNET

Merely showing that a sensor can record objects of a particular size at a particular range, or resolve two objects close to each other, does not actually require a flying drone. Existing test facilities from the automotive domain are well suited for such tests. Likewise, reference objects from the automotive domain can be reused for these demonstrations and will be complemented by reference objects from the aviation domain (e.g., drones, birds, electric lines). The result will be one demonstrator per sensor and per sensor version: Radar (Demonstrator 1.1), LiDAR (Demonstrator 1.2), 3DI (Demonstrator 1.3). These demonstrators are internal to the supply chain and do not feed into other supply chains directly. They are also not intended to actually fly, so they may not meet size, weight or power requirements.

Partner: IFAT, IFAG, RUB, FHR, UNI-KLU, TUG, BHL, IFI, IUNET

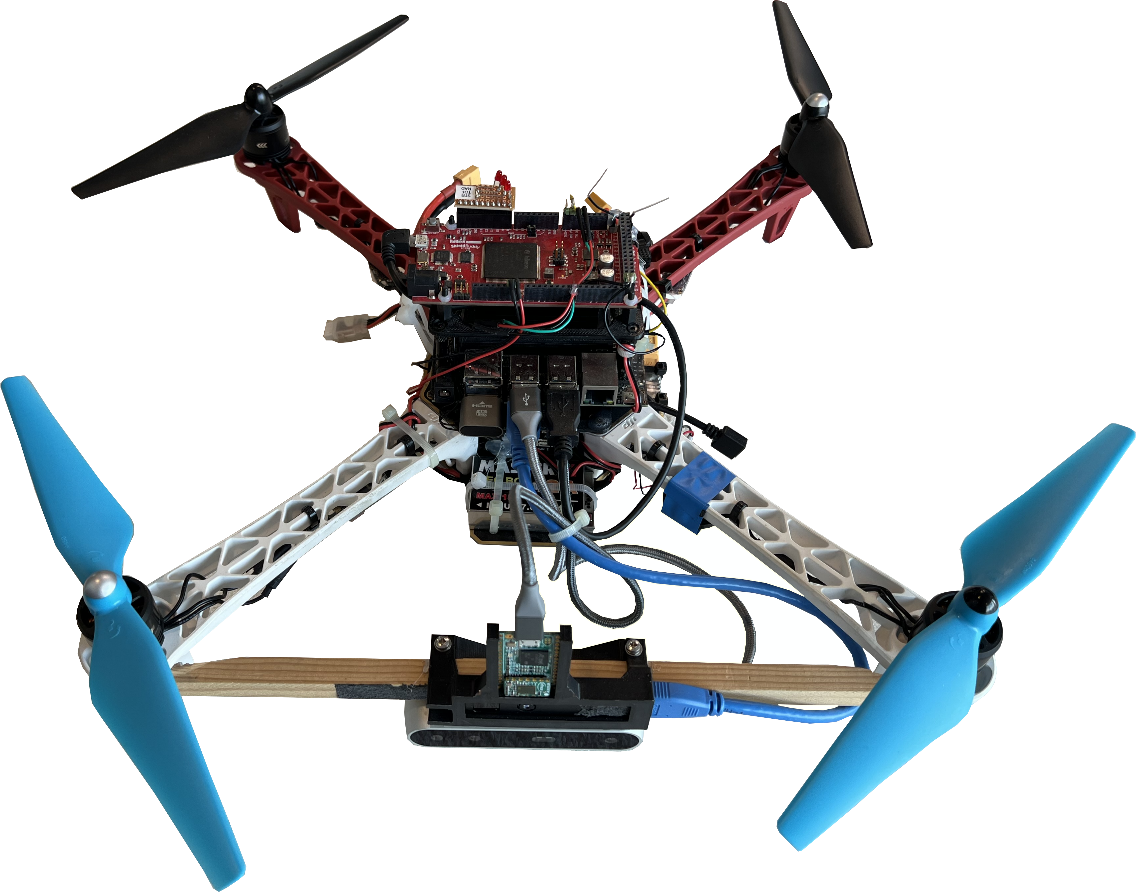

The main goal of Demonstrator 1.4 is to provide a fly-ready drone demonstration that can perform some autonomous functions during flight. The drone should be able to execute a simple point-to-point flight with a planned landing in optimal conditions, assuming full visibility and no obstacles on the route. The demo also aims to facilitate the acquisition of synchronized multimodal sensor data from different devices and to provide the backbone for the development of the multisensory avionic architecture delivered in Demo 5.1.2. The demonstrator consists of two sub-demonstrations: one targeting outdoor scenarios and one targeting indoor environments.

For the outdoor demonstrator, the partially automated flight features are showcased through HITL simulations involving the Pixhawk4 flight controller and a self-implemented simulation framework developed by IFAG in the context of SC5. The simulation environment supports the acquisition of multimodal synthetic data in multiple scenarios (forestry, city, open field). Real data is acquired with an equipped cycling helmet, on which a 3D-printed support hosts the sensor setup. In this case, the data collection takes place mostly at the Infineon headquarters in Munich (Germany), which offers a combination of forest, buildings and open fields. The multimodal dataset includes information from Radar, ToF Camera, Stereo Camera, IMU and GPS with RTK (Real Time Kinematics) correction. This data will be recorded in the same format as the ASL EuroC MAV dataset. The produced dataset is intended to serve as a benchmark to test the sensor fusion algorithms developed by the partners within SC2. These algorithms address diverse tasks in autonomous drone flight, including target detection and SLAM (Simultaneous Localization and Mapping). Different static and moving obstacles are introduced in the environments, including trees, people and bikes. To better test the robustness of the developed algorithms, effort is put into diversifying the data recording conditions (e.g., type of environment, weather conditions, time of day, speed).

The indoor demonstrator is based on a DJI-450 Flame Wheel flight frame. It features a Pixhawk Cube Orange flight controller and a front-facing mount for the environmental sensing module. The module consists of a time-of-flight camera, a stereo camera and a 60 GHz radar sensor. The drone system is completed by an Aurix Shieldbuddy TC375 board and an NVIDIA Jetson Nano, which perform the data acquisition and sensor fusion demonstrated in Demo 5.1.2.
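Synchronized multimodal acquisition usually requires associating samples from sensors that run at different rates. The following is a minimal sketch of nearest-timestamp association, not the project's actual pipeline. It assumes each stream is a sorted list of timestamps in nanoseconds; the function name `associate_nearest`, the tolerance and the sample rates are hypothetical.

```python
from bisect import bisect_left

def associate_nearest(reference_ts, other_ts, max_offset_ns):
    """For each reference timestamp, return the index of the closest
    timestamp in other_ts, or None if it is further away than max_offset_ns.
    Both lists must be sorted in ascending order (nanoseconds)."""
    matches = []
    for t in reference_ts:
        pos = bisect_left(other_ts, t)
        # Candidates: the neighbours just before and just after t.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(other_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda i: abs(other_ts[i] - t))
        within = abs(other_ts[best] - t) <= max_offset_ns
        matches.append(best if within else None)
    return matches

# Hypothetical timestamps for illustration only.
camera_ts = [0, 50_000_000, 100_000_000]
radar_ts = [2_000_000, 35_000_000, 68_000_000, 101_000_000]
pairs = associate_nearest(camera_ts, radar_ts, max_offset_ns=5_000_000)
```

Frames without a partner inside the tolerance are marked `None` rather than being paired with a stale sample, which keeps poorly aligned data out of a fusion benchmark.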

Partner: ERI, CEA, INBV, KATAM, ULUND, IFAG

Conventional processor architectures use the load-store concept which results in compute performance being throttled by memory bandwidth limitations. SC2 will develop specialized forward-looking computing architectures which target the requirements of drones through the co-location of compute and memory, thus realizing efficient acceleration for perception and decision-making. This demonstrator will explore the performance efficiency advantages in a lab-environment, using synthetic traces and limited sensor data streams, within the requirements/specification envelope of selected use-cases.
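The bandwidth limitation mentioned above is commonly reasoned about with the roofline model, in which attainable performance is the smaller of the compute peak and the product of memory bandwidth and arithmetic intensity. The sketch below illustrates that bound only; all numbers are hypothetical and do not describe any SC2 hardware.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """Roofline bound: performance is capped either by the compute peak
    or by memory bandwidth times arithmetic intensity."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)

# Hypothetical figures for illustration only.
peak = 1000.0      # GFLOP/s
bandwidth = 50.0   # GB/s

memory_bound = attainable_gflops(peak, bandwidth, 0.5)    # low arithmetic intensity
compute_bound = attainable_gflops(peak, bandwidth, 40.0)  # high arithmetic intensity
```

In the memory-bound case, raising the effective bandwidth (for example by co-locating compute and memory) lifts the bound directly, whereas adding compute units does not.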

Partner: ERI, CEA, INBV, KATAM, ULUND, IFAG

Reliable and trustworthy interpretation of raw data gathered from different sensing modalities is crucial for Beyond Visual Line of Sight (BVLOS) drones. This demonstrator will enable reliable processing architectures for the interpretation of such data. The demonstrator will be a simulator framework (Multi Agent eXperimenter) that shows an integrated data interpretation and communication architecture. Blockchains will be used to store the data in an immutable manner, making it impossible to modify the stored information intentionally or unintentionally.

Partner: ESC, ANYWI, CTG, IFAT, NOKIA, TAU, TUD, UNIPR, VIF, FORD, HUA

After integrating the developed environmental perception sensors from SC1, flight campaigns representing typical BVLOS use cases will be performed using different drone platforms. These flight campaigns serve as a basis to generate data from all installed environmental sensors, to be used for demonstration and performance analysis of the designed environment perception algorithms. An autonomous landing scenario on a visual marker will be executed to evaluate the ego-motion estimation and localization algorithms in combination with the environment perception algorithms. In addition, the wireless video streaming and communication between drones and ground will be evaluated. As a second scenario, the detection of objects on a smart construction site will be investigated using tethered drones.

Partners: ALM, NOKIA, TAU, HUA, VIF

An environment simulation framework for the development and testing of environment perception algorithms will be designed. This simulation framework will be capable of including dynamic models of different drones together with sensor models, and will take environmental conditions such as weather and illumination into account. Furthermore, the simulation framework will be extended to perform video streaming and hardware-in-the-loop tests of individual drone components. In the simulated environment, path planning algorithms that dynamically detect and avoid obstacles will be developed and tested. Recorded stereo camera data from Demo 3.1 will be used to validate the depth estimation by stereo matching that will be developed in this demonstrator.
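For a rectified stereo pair, depth estimation by stereo matching rests on the standard pinhole relation Z = f · B / d, where f is the focal length in pixels, B the baseline in metres and d the disparity in pixels. The sketch below shows only this conversion step; the function name and the camera parameters are hypothetical and not taken from the demonstrator.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity (pixels) from a rectified stereo pair into
    metric depth (metres). Returns None for non-positive disparities,
    which correspond to invalid matches or points at infinity."""
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera parameters for illustration only.
depth_m = depth_from_disparity(disparity_px=42.0, focal_length_px=700.0, baseline_m=0.12)
```

Because depth is inversely proportional to disparity, a fixed matching error of one pixel produces a much larger depth error at long range than at short range, which is worth keeping in mind when validating against recorded data.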

Partners: NLR, ANYWI, CTG, TUD

Drone flight campaigns were conducted at the NLR Drone Centre for test and demonstration of on-board Detect And Avoid (DAA) systems, including drone encounters. In addition, the transponder interrogator and localisation solution were integrated to form the Cooperative Traffic Sensor (CTS), by NLR and CTG. Based on this set-up the CTS was validated to showcase a secure integration of the UASs in common airspace. The CTS was tested and verified on the ground with a flying intruder equipped with a Mode S transponder, by NLR. Besides this integration scenario, a new DAA method for safe navigation of small-sized drones within an airspace based on received azimuth, elevation, and altitude information was proposed by TU Delft and its performance was validated by simulation experiments. Additionally, the transformation of the situation awareness data from the DAA system into a suitable format in preparation for transmission to ground, other drones, and/or U-space systems was validated by ANYWI.

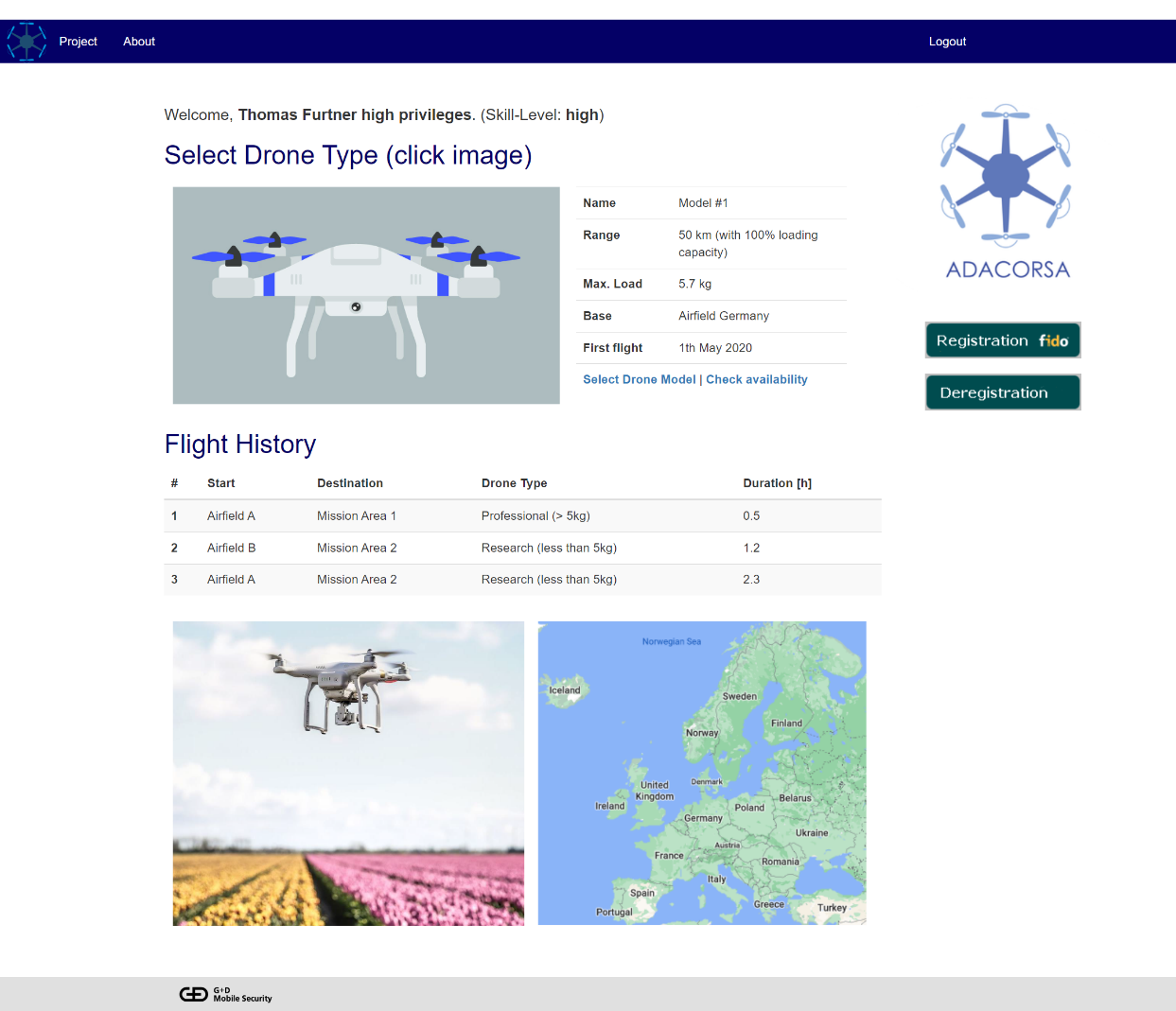

Partners: GD, IFAG, IFAT

To enable long range drone control and especially Beyond Visual Line of Sight (BVLOS) for drones, 5G networks will play an important role. Especially the ultra-reliability and low latency of 5G networks are necessary to enable accurate communication.

For 5G connectivity, an enhanced version of the Universal Integrated Circuit Card (UICC) will be used. For the drone use cases, highly integrated versions of the UICC, such as the embedded UICC (eUICC), will be used, which additionally enable remote connectivity management (eSIM). The UICC platform offers a high level of proven security and is able to host additional security-relevant applications. In the drone use case, this platform can be used to provide a reliable identification of the drone towards the infrastructure based on state-of-the-art cryptographic protocols (required to fulfil regulations). Additionally, the UICC can be used for enhanced application layer security to provide confidentiality, authenticity and integrity for the communication layer (e.g. video transmission). The goal is to securely identify the drone itself and to verify that the operating pilot is in possession of a valid licence to control the drone. The identification of the operator is based on the user-friendly FIDO standards. The standardisation of a Drone Identity Module (ISO/IEC 22460-2) based on a Secure Element such as an eUICC has just started in ISO/IEC JTC 1 / SC 17 / WG12. This group also standardises a Drone Licence (ISO/IEC 22460-3), which can be used to authenticate the licence holder to the drone and prove that she is allowed to pilot the drone. This would make it possible to identify both a drone and the person piloting it. WG12 collaborates with the standardisation group ISO/TC 20/SC 16, Unmanned Aircraft Systems.

Furthermore, a second D4.1 sub-demonstrator has been developed, which also uses the eSIM (eUICC) chip, but for another purpose: securing the “TLS connection establishment” procedure inside a drone by using the “IoT SAFE” implementation enhancement running inside the eSIM chip. This significantly improves today’s drone security by enabling a two-stage authentication process: first, the eSIM authenticates to the mobile provider; second, the eSIM and the “IoT SAFE Applet” perform the authentication in a hardware-protected environment during TLS connection establishment to the cloud/operator. The underlying concept of the developments is depicted in the figure below:

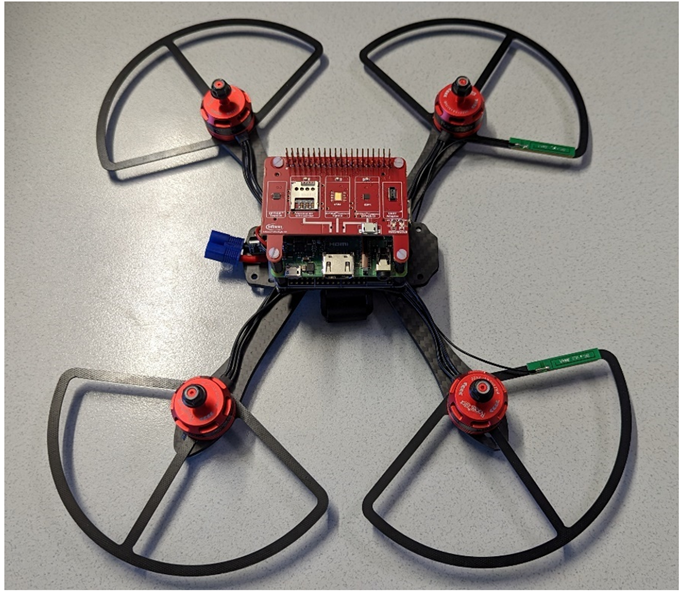

The final D4.1 sub-demonstrator is depicted in the figure below. It is based on the Infineon “Larix” drone development platform and enhances its TLS communication by using the “eSIM Development-Board” (red board) with the “IoT SAFE Applet” installed, mounted on top of the drone’s Raspberry Pi (used as drone controller and communication platform).

Partners: ANYWI, AVU, OTH-AW, BHL, NXP, TECHNO, ISEP, CISC, CEA, UNIPR, IUNET

In addition, developments in beamforming will further improve the reliability of the cellular network connectivity. This multimodal connectivity for generic message transfer is complemented by technologies for dedicated message transmissions, comprising SubGHz for critical messages with low payload (e.g. GPS position for status monitoring) over long distances, and BLE, UWB and NFC for short-range messages (e.g. improving positioning at the landing zone or secure identification of the operator). An integrated identification and communication architecture will enable trustworthy cooperation in networks of UAVs, ensuring authenticity, data integrity, and trust. Blockchains are considered for storing the communication in an immutable manner, making it impossible to modify the stored information intentionally or unintentionally, and for managing authorizations in a reliable and secure way through algorithms using the stored data. An authentication system will enable the UAVs to verify each other's identity and will prevent outsiders from entering the network, while a trust management system will evaluate the trustworthiness of insiders. The resulting gateway for multi-protocol communication shall be implemented on a modular drone platform and shall be rigorously tested for use cases in urban, remote and industrial areas, demonstrating the suitability of the platform for AR, inter-drone data sharing and remote piloting applications.

Partners: TCELL, TB

Due to their mobility, UAVs are considered to provide a flexible way to enable 4G and 5G connectivity. This supports various services such as the provisioning of a communication link for emergency tasks in post-disaster scenarios as well as temporary access in remote areas. The UAV is inserted as a flying base station (relay node) between a ground-based base station and ground-based UEs or other flying BSs/UEs, which increases the overall signal coverage significantly with comparatively little effort and ensures low latencies between the connected entities. In this demonstrator, the partners will develop the whole setup to provide this flying base station, including the investigation of related topics, e.g. designing the network architecture with a mobile drone platform as well as the frequency planning.

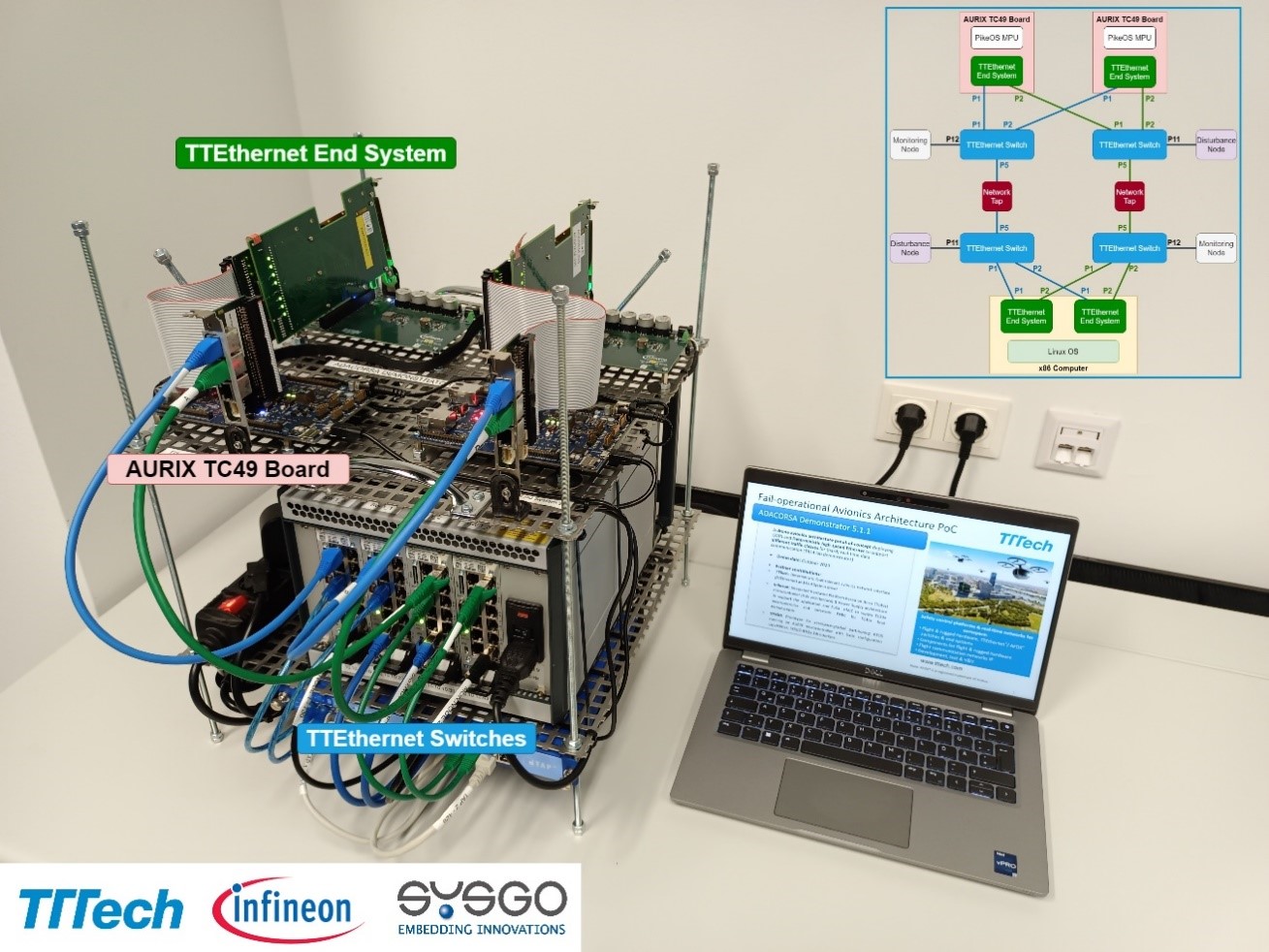

Partners: TTT, IFAG, IFAT, SYSGO, with support from HighTec

The laboratory demonstrator (TRL4) illustrates advanced deterministic networks combining best-in-class solutions from the automotive and aerospace worlds. The demonstrator showcases a proof of concept for a novel drone avionics architecture. Automotive and aerospace-grade components are the main building blocks of this solution. TTTech’s high-speed Deterministic Ethernet backbone is a key element of the demonstrator. It ensures Deterministic Ethernet-based data communication according to a pre-defined schedule with very high reliability, making it suitable for certifiable avionics in aeronautics and space. Infineon’s TÜV-certified automotive AURIX safety microcontroller, extended for multicore processing tasks and equipped with an innovative power management IC, is deployed to guarantee functional safety up to the highest needs. In addition, the highly security- and safety-certified real-time operating system PikeOS by SYSGO has been integrated to support aerospace applications up to DAL A. The company HighTec supported the integration of this demonstrator by providing a compiler for AURIX.

Partners: IFAG, IFAT

Demo 5.1.2 consists of two drone systems: one for indoor and one for outdoor environments.

The indoor demonstrator includes an environmental sensing module containing a time-of-flight camera, a stereo camera and a 60 GHz radar sensor. Data from all three sensors is processed on an NVIDIA Jetson Nano computing board running ROS and is fused to detect and classify objects in the drone’s path. The flight-critical algorithm for filtering and ensuring redundancy runs on an Aurix TC375 under a dedicated real-time operating system (PXROS). Current activities include a series of experiments necessary for the validation and verification of the demonstrator setup, and flight tests with obstacles to be avoided.

The outdoor demonstrator extends the environmental sensor setup of the indoor demonstration (stereo camera, ToF camera, radar) with additional sensors enabling outdoor navigation (LiDAR, IMU, RTK GPS). Furthermore, it includes a COTS flight controller (Pixhawk4) and three embedded computing platforms (NVIDIA Jetson Nano, FPGA and Aurix TC375). The functionality of the architecture is showcased in multiple use case scenarios including data gathering, object detection and avoidance, and Simultaneous Localization and Mapping (SLAM). The results will be showcased as a lab demonstration through HITL/SITL simulations with the help of a customized AirSim-based flight simulator that includes a model of the 60 GHz radar. Current activities include the validation of a HITL detect-and-avoid demonstration involving the NVIDIA Jetson Nano, the Aurix microcontroller and the flight controller.

Partners: NXP, TECHNO, NLR, ANYWI

Demonstration of the Modular Base drone system, highlighting critical differentiators at sub-system and system level in the areas of quality of service, security/authentication of subsystems and communication, and safety and mixed-criticality concepts in the architecture. An industrial-grade UAS, based on automotive-grade CPUs, including the following features:

- Secure element

- NFC, e.g. for distribution of certificates

- CAN bus and Automotive Ethernet, switch

- Companion computer vision (modular, optional)

- Authentication between components.

The aim of the demonstrator is to prototype an industrial grade UAS based on a modular setup and maximally based on automotive components.

Partners: ISEP, EMBRT

In this demonstrator, we will validate the “Point-to-Point” (P2P) communication architecture in a HiL (hardware in the loop) simulation environment. This will enable the validation and demonstration of the architecture in representative operational scenarios, defined by EMBRT, concerning a fail-safe operation of flight control and navigation systems.

Partners: ESC

This use case demonstrates the capability of a multi-sensor, multi-constellation PNT solution for precise drone navigation, with the possibility to communicate the location of the drone during the whole flight, including in areas with no LTE coverage. The functionality of the communication unit delivering RTCM correction data to the onboard PNT during the whole flight will also be demonstrated. The communication unit selects the active communication channel based on the actual and predicted signal strength data. The demonstrated system will use two independent PNT solutions, which will communicate closely with each other and ensure that the system receives resilient and accurate positioning information even in case of a HW or SW failure.
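One simple way to combine actual and predicted signal strength into a channel choice is to rank each channel by the worse of the two values and pick the best. The sketch below illustrates that idea only; it is not the selection logic of the communication unit. The channel names, the dBm values and the threshold `min_dbm` are hypothetical.

```python
def select_channel(channels, min_dbm=-110.0):
    """channels maps a channel name to (actual_dbm, predicted_dbm).
    Each channel is scored by the worse of its two values; the channel
    with the best score is returned, or None if no score reaches min_dbm."""
    usable = {}
    for name, (actual, predicted) in channels.items():
        score = min(actual, predicted)
        if score >= min_dbm:
            usable[name] = score
    if not usable:
        return None
    return max(usable, key=usable.get)

# Hypothetical measurements for illustration only.
active = select_channel({
    "lte_1": (-85.0, -115.0),   # strong now, predicted to drop out
    "lte_2": (-95.0, -97.0),    # moderate and stable
    "satcom": (-100.0, -100.0),
})
```

Scoring by the worse value is a conservative design choice: a channel that is strong now but predicted to degrade is not preferred over a weaker channel that is expected to hold.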

Partners: FRQ

This demonstrator will create a Registration, Identification, Tracking & Payload Sensor Data Processing Infrastructure. The proposed pre-operational Flight Information Management System (FIMS) comprises an interoperability architecture for integrating existing commercial off-the-shelf (COTS) UTM components as well as other infrastructures to perform demonstration activities based on operational scenarios and concepts of operations with respect to registration, identification, tracking and payload sensor data processing. The demonstrator will show the fitness for purpose of combining COTS components to demonstrate all phases of drone operations, with a focus on pre-flight and flight execution. The concept translates the workflow into services and interactions with external parties and other infrastructures, enabling shared situational awareness for all aviation stakeholders. A proposed microservice-oriented data exchange layer provides standard protocols to connect various UTM services, allowing for automated drone traffic management and situational awareness. The proposed architecture underlines that the data exchange layer might be subject to a centralised regime owned by the ANSPs (in accordance with current ICAO rules and regulations). Ultimately, the whole concept shall comply and integrate with existing ICAO regulations (ATM/UTM integration) while simultaneously providing a service-oriented infrastructure to enable new business models for the UTM service provider landscape.

Partners: UBW, SYR, ALM

In order to facilitate routine autonomous UAV operations that are not only safe but also certifiable and trusted by the public, it is critical that devices and functionality to guarantee safety are easy to explain and understand. We here provide a purely algorithmic "safety layer" that works on top of any available on-board functionality. The layer exchanges data regarding motion intention, dynamic capabilities and possible safety issues, with other vehicles and with ground-based traffic management systems, continuously verifies vehicle safety, and takes over control only to enforce very basic safety maneuvers in case of emergency or failure of critical on-board capabilities. This way, safety of the vehicle is guaranteed independently of the functioning, performance, or even the brand of many complex on-board systems such as communication units and motion planners. Instead of certifying all those systems, it suffices to certify the safety layer. Moreover, the top-level functionality of a safety layer is easily explained and demonstrated to both lawmakers and authorities. The development of the safety layer includes the design of diagnosis algorithms to verify vehicle capabilities and technical safety in real time, and of a distributed conflict detection and resolution strategy. Functionality of conflict resolution will be supported by fast and accurate motion prediction procedures, and will take advantage of hardware modules from the industrial grade UAS system developed in SC5. Vehicle capabilities will be described and shared in the form of simplified dynamic models with guaranteed uncertainty bounds, and the safety layer will also rely on a small set of basic safety manoeuvres in the form of low-level motion controllers. UBW will create and provide a UAV lab that permits experimental tests of scenarios with high airspace density. 
The goal is to experimentally demonstrate safe autonomous operation of several independent UAVs in densely populated airspace, under laboratory conditions. Failure of on-board components such as detection, communication and planning systems will certainly degrade performance, but must not impair safety as long as the safety layer is functioning. The dependence of performance degradation on particular failures and on airspace density will also be evaluated. Hazard analysis and risk assessments of particular identified failure modes will be performed to address regulatory requirements in support of multi-UAV scenarios.
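Conflict detection between two vehicles is often illustrated with a closest-point-of-approach (CPA) test under a constant-velocity motion prediction. The sketch below shows that textbook check as one possible building block; it is not the safety layer's algorithm and it ignores the uncertainty bounds mentioned above. Function names, the separation minimum and the look-ahead horizon are hypothetical.

```python
import math

def closest_point_of_approach(p1, v1, p2, v2):
    """Return (t_cpa, d_cpa) for two vehicles moving at constant velocity.
    Positions are 3-tuples in metres, velocities in metres per second.
    t_cpa is clamped to be non-negative (only the future is considered)."""
    dp = [a - b for a, b in zip(p1, p2)]
    dv = [a - b for a, b in zip(v1, v2)]
    dv2 = sum(c * c for c in dv)
    if dv2 == 0.0:
        t = 0.0  # identical velocities: the distance never changes
    else:
        t = max(0.0, -sum(a * b for a, b in zip(dp, dv)) / dv2)
    d = math.sqrt(sum((a + b * t) ** 2 for a, b in zip(dp, dv)))
    return t, d

def in_conflict(p1, v1, p2, v2, min_separation_m, horizon_s):
    """Flag a conflict if the predicted minimum distance falls below the
    separation minimum within the look-ahead horizon."""
    t, d = closest_point_of_approach(p1, v1, p2, v2)
    return t <= horizon_s and d < min_separation_m

# Hypothetical head-on encounter at the same altitude.
head_on = in_conflict((0, 0, 10), (5, 0, 0), (100, 0, 10), (-5, 0, 0),
                      min_separation_m=20.0, horizon_s=30.0)
# Hypothetical parallel flight 50 m apart.
parallel = in_conflict((0, 0, 10), (5, 0, 0), (0, 50, 10), (5, 0, 0),
                       min_separation_m=20.0, horizon_s=30.0)
```

A check of this kind is easy to explain and inspect, which fits the stated aim of keeping the safety functionality simple to understand.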

Partners: BHL, ALM

This demonstrator has the goal of setting up a ROS-based UTM traffic simulation environment to model and simulate selected UTM traffic scenarios, parametrized with drone sensor performance characteristics, drone on-board intelligence functionality, and link performance characteristics. Advanced drones integrate functionalities such as digital telecommunications, sensing and control capabilities as well as on-board computing, and thus represent Cyber-Physical Systems (CPS). Taken by themselves, individual technologies (for example for sense-and-avoid) have shortcomings that make them insufficient for safe flight operations. With technology convergence, e.g., of telecommunications, databases and predictive capabilities, and with improved availability and performance in the different technology domains, these shortcomings may be overcome. Considering the UTM system as a Cyber-Physical System of Systems (CPSoS), an analysis of the interplay between sensing, telecommunication, and on- and off-board computing may thus provide an important

Partners: NLR

One of the main use cases of the work performed in the ADACORSA project is the ability to perform Beyond Visual Line of Sight (BVLOS) flight operations by using the Detect and Avoid (DAA) system solutions developed in this project. Selected components developed in SC3 and SC5 will be integrated into the NLR Detect and Avoid flying testbed to perform a verification test flight campaign. The requirements and flight test cards will be drafted in close cooperation with the development partners of the selected components (NXP and TTTech). The operational scenarios will be chosen taking into account the different available U-space concept of operations (ConOps) results produced by the SESAR CORUS project and other entities working on these drone ConOps. In preparation for the flight test campaign, a Specific Operations Risk Assessment (SORA) will be performed. The goals of the test flight campaign are to: 1. verify the correct functioning of the selected components (from SC3 and SC5); 2. identify necessary and/or desired improvements for these components and/or the system (architecture); 3. evaluate functional, technical and certification requirements and identify possible issues; 4. demonstrate safe navigation through airspace with this system while encountering other (manned) aircraft. The flight tests will be performed at the Netherlands RPAS Test Centre (NRTC) operated by NLR at its Marknesse facilities. The activities will be concluded by an end demonstration flight with the tested system to substantiate the Beyond Visual Line of Sight (BVLOS) use case.

Partners: CEA

This demonstrator will create a UTM Blockchain Simulation Environment enabling the modelling and simulation of UTM blockchain scenarios parametrized with different types and numbers of drones. To enable trustworthy cooperation in networks of drones (mainly to ensure authenticity, data integrity, and trust), an integrated identification and communication architecture will be provided in which blockchains store the communication in an immutable manner. This way, it will be impossible to modify the stored information intentionally or unintentionally, and authorizations will be managed in a reliable and secure way through algorithms using the stored data. The authentication system will enable the drones to verify each other's identity and will prevent outsiders from entering the network, while the trust management system will evaluate the trustworthiness of insiders. However, evaluating such a blockchain-based architecture for drones is not trivial and needs to be assisted by simulations. The goal is therefore to experimentally evaluate UTM blockchain scenarios (trustworthy cooperation: identification, authentication and so on) parametrized with blockchain characteristics, drone characteristics and drone on-board intelligence functionality. The goal is also to verify that the stored information cannot be modified intentionally or unintentionally, that authorizations are managed in a reliable and secure way through algorithms using the stored data, and that outsiders are prevented from entering the network.
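The immutability property can be illustrated with a plain hash chain, in which every block stores the hash of its predecessor so that any later modification is detectable. The sketch below shows only this tamper-evidence mechanism; it omits consensus, signatures and networking, and it is not the demonstrator's blockchain. All names and the message payloads are hypothetical.

```python
import hashlib
import json

def _digest(index, payload, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block body."""
    body = {"index": index, "payload": payload, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def make_block(index, payload, prev_hash):
    """Create a block whose hash covers its content and its predecessor's hash."""
    return {"index": index, "payload": payload, "prev_hash": prev_hash,
            "hash": _digest(index, payload, prev_hash)}

def chain_is_valid(chain):
    """Recompute every hash and check every link to the previous block."""
    for i, block in enumerate(chain):
        if block["hash"] != _digest(block["index"], block["payload"], block["prev_hash"]):
            return False
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True

# Hypothetical drone messages for illustration only.
chain = [make_block(0, "genesis", "0" * 64)]
for i, message in enumerate(["drone-A position report", "drone-B position report"], start=1):
    chain.append(make_block(i, message, chain[-1]["hash"]))

# Tampering with a stored message on a copy of the chain breaks validation.
tampered = [dict(block) for block in chain]
tampered[1]["payload"] = "forged position report"
```

In this sketch `chain_is_valid(chain)` holds while `chain_is_valid(tampered)` does not. A hash chain on its own makes modification detectable rather than impossible; preventing it additionally requires replication and a consensus mechanism, which a simulation environment can parametrize.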

Partners: FORD, TAI, TCELL, TB, KATAM, ROBONIK, BUYUTECH, AVU, CC

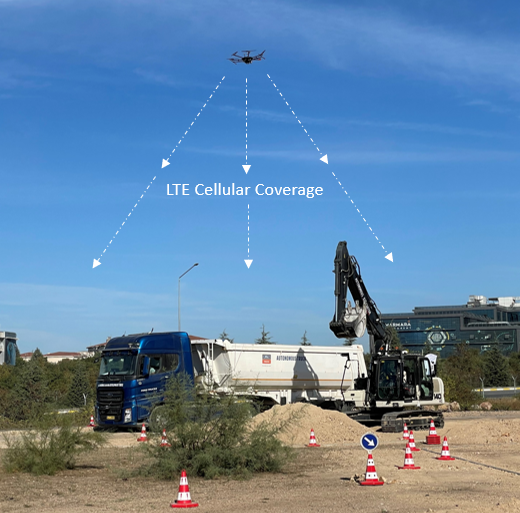

As an important part of Demonstrator 7.3 in terms of reliable communication, the drone platform, equipped with TURKCELL’s LTE relay cell, provided 4.5G connectivity and mobile network service in a construction area while simultaneously providing its sensor data to the occupancy grid for trucks and excavators.

During Demonstrator 7.2, the flying base station was stationed above the construction site as seen in the figure below. Throughout the use case, the flying base station provided mobile network coverage to enhance the connectivity and service quality on the construction site. To ensure sustainable operation during the demo, all equipment was powered by the UAV's battery, eliminating the need for an extra battery and its weight.

Partners: FORD, TAI, TCELL, TB, KATAM, ROBONIK, BUYUTECH, AVU, CC

The use of drones on construction sites is still limited. Smart construction can be enabled by precise perception, mapping and reliable communication, all of which are provided by emerging drone capabilities. In ADACORSA Demonstrator 7.3, our goal is to prove the effectiveness of drone support on construction sites. In this demo, FORD OTOSAN's autonomous Truck and BÜYÜTECH's autonomous Excavator perform loading and unloading operations in a smart construction area, centrally controlled by TÜBİTAK's Ground Control Station using the developed route planning algorithms and inter-vehicle messaging infrastructure.

TÜBİTAK's drone;

(i) reaches the operation area and uses its on-board sensors to inform the Ground Control Station about any situation that may jeopardize the safety of the operation, and

(ii) provides surveillance while the other vehicles operate by providing real-time video to the Ground Control Station. In addition, another drone provides communication with its portable 4G base-station load for construction sites in rural areas where internet infrastructure is unavailable.

In the demo, both vehicles reach the excavation point autonomously. Under the supervision of the Ground Control Station, the Excavator autonomously loads soil into its bucket and then unloads it into the middle of the Truck’s trailer body, the location of which is tracked and communicated by the Ground Control Station. After excavation is complete, the Ground Control Station triggers the Truck, which autonomously navigates to the final dumping point, where it dumps the soil from its trailer. All travelling, excavation and dumping operations are performed autonomously with the support of drones and without any human intervention.

Partners: FORD, TAI, TCELL, TB, KATAM, ROBONIK, BUYUTECH, AVU, CC

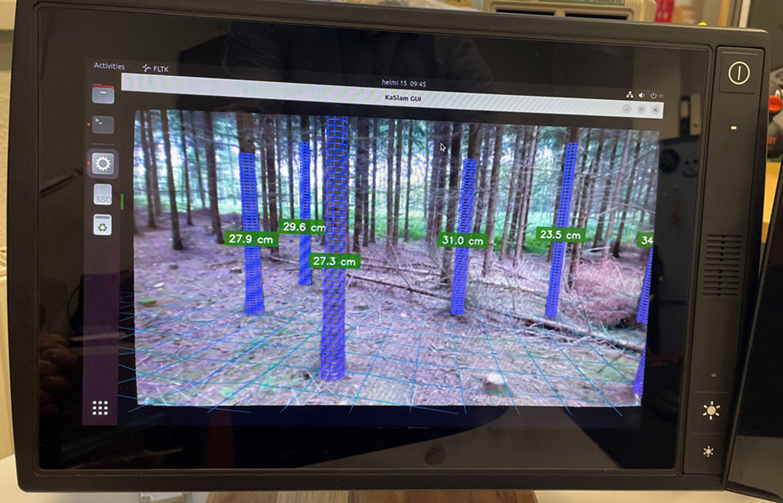

The drone will record the terrain and the trees. Orientation will be completely or partially independent of GPS support because satellite reception is poor under a dense tree canopy. In the demo, the drone flies below the tree canopy from position A to position B and makes video recordings of the surrounding environment. Video and sensor data is stored on the device for post-flight analysis. As an intermediate solution, data acquisition during the project was performed with a hand-held drone system.

For post-flight analysis, the HMI edge computer performs AI-based processing of the drone-recorded video feed, and visualizes the terrain and tree diameters on the display, made available to the view of a forestry harvester vehicle operator.

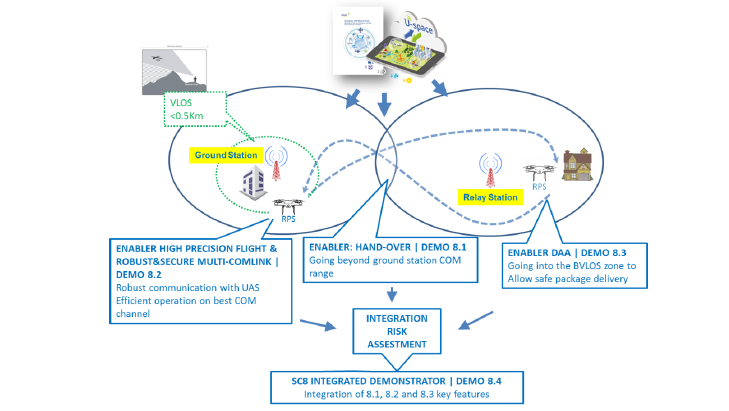

Partners: EMBRT, ISEP, ANYWI

Objectives: (1) demonstrate a safe and secure Authority Control Hand-Over of a UAS, enabling safe and secure flight between ground control stations for BVLOS delivery flights. To achieve this, the handover solution will be tested in flight, ensuring that the drone's flight trajectory crosses the hand-over region within safety margins, enabling a safe and controlled control hand-over.

(2) demonstrate robust C3 link. At any point in its flight, the drone shall switch from its main external communications link to a secondary external communication link whenever QoS is downgraded from a nominal operational level.

The control handover mechanism will rely on a Wireless Safety Layer, capable of supporting multiple connections and a set of wireless defence mechanisms against communication errors, while guaranteeing as much isolation from the communications protocols and systems in use as possible.

Partners: ESC

The objective of this demonstrator is to demonstrate a highly-accurate position determination system and secure and resilient multi-channel communication technology, that is at the same time affordable for drone usage. The use case will demonstrate the utilization of the multi-channel communication, which incorporates bi-directional data transfer leveraging multiple channels including Wi-Fi, 2 LTE channels and SATCOM. The ability to control the drone when connected only to SATCOM channel will also be demonstrated.

Partners: NLR, EMBRT, HUA

This demonstrator focuses on the miniaturised component solutions for DAA developed in this project in SC3 and SC5. It will integrate the components developed in those SCs into the NLR DAA flying testbed. The goal of the demo flight campaign is to demonstrate the developed miniaturised DAA system for safe navigation in airspace within and outside U-space while encountering other (un)manned aircraft equipped with transponder-type tracking devices. The demo scenario is based on the SC8 reference for drone logistics. The flight tests will be performed at the Netherlands RPAS Test Centre (NRTC) operated by NLR at its Marknesse facilities and will demonstrate separation assurance to enable safe BVLOS flight operations.

Partners: EMBRT, NLRT, ISEP, ANYWI, ESC

To achieve full capability for BVLOS logistics, the different capabilities demonstrated by demos 8.1, 8.2 and 8.3 were integrated. This is a challenge in itself, compounded in ADACORSA by the maturity levels to be achieved by the different functionalities within the project timeframe. This demo will show the modularity and interoperability of the different technologies when moved to a different platform (e.g., from the drones used in the dedicated demos to the integrated demo platform) to achieve BVLOS capability.

Partners: ITML, IFAG, IFAT, FORD, TAI, ESC, HUA, HFC, ALTUS

Ramadan, Farah, & Mrad (2017) used the theory of planned behaviour (TPB; Fishbein & Ajzen, 2011) as a framework to investigate the perceived risks and perceived functional benefits of drones and their impact on attitudes towards service and delivery drones. According to TPB, a user’s behaviour when using (or not using) a new technology is based on behavioural intentions, which in turn are based on (1) the attitudes of the individual, (2) the subjective norms, and (3) the perceived behavioural control. Ramadan et al. (2017) adapted the basic idea of TPB to drone acceptance and developed a model of influencing factors. The main factors influencing attitudes towards drones are risk-related factors (privacy risks, safety risks), functional benefits (drone performance and service quality), and relational attributes (drone personification), which take up the idea of subjective norms. Within this SC, ADACORSA will further investigate the applicability of TPB to drone user acceptance, aiming to provide evidence that the reliability of ADACORSA achievements meets the requirements of European citizens, so that the European drone industry is supported. The main method to be used is surveys, which are to date the most commonly used method to investigate acceptance of drone technologies. Surveys focus on the commercial and civilian use of drones, which is also relevant for ADACORSA. For instance, in Bajde et al. (2017), people were directly exposed to drone presence in public spaces, their reactions were observed, and they were interviewed afterwards. Over the last couple of years, a number of surveys have identified some of the most critical factors for user acceptance of drones: environmental friendliness, along with complexity, performance risk, and privacy risk, affects drone delivery adoption (Yoo, Yu, & Jung, 2018), and privacy issues are also a critical factor (Khan et al., 2019).
The goal of the SC, mainly through demonstrator 9.1, is to validate the main factors that affect the user acceptance of drones through surveys and deliver a thorough, multi-variable predictive model that will facilitate the understanding of what is needed in order to support the European drone industry.

Partners: ITML, IFAG, IFAT, FORD, TAI, ESC, HUA, HFC, ALTUS

In 2017, the European commercial drone market was estimated at over 27 thousand units, anticipated to grow at a CAGR of 24% until 2024, with a market value of more than USD 500 million, anticipated to grow at a CAGR of around 21% over the forecast timespan. By 2020, the European commercial drone market is expected to generate more than 544 million U.S. dollars in revenue. Nevertheless, the fast adoption of new technologies and achievements is always a critical factor for that growth, and ADACORSA aims to analyse and quantify that relationship within this SC. In more detail, SC9 will carry out a thorough market analysis for the outcomes of ADACORSA through three complementary workflows (pillars) that will be brought together in Demonstrator 9.2. The first is a detailed analysis of the technical improvements of ADACORSA with respect to their impact on the EU drone market; the second is a thorough, updated market analysis of the drone industry in the EU; the third is the design and delivery of a roadmap towards the adoption of the ADACORSA achievements by the EU market, in collaboration with the user acceptance analysis carried out in Demonstrator 9.1. The purpose of the work carried out and demonstrated in these three pillars is to maximize the utilization of the ADACORSA technological advancements in the EU drone industry: to help unlock potential in BVLOS drone operations, to facilitate the exploitation of better sensors via fusion and more reliable communications via integration of data links, to integrate leading AI and data analytics into future air mobility, and ultimately to strengthen the integration of the automotive and drone industries.
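For readers unfamiliar with CAGR figures, the short sketch below shows how a compound annual growth rate translates into a projected value, using the unit figures quoted above. The helper names are hypothetical, and because the source gives the 2017 figure only as a lower bound ("over 27 thousand units"), the result is an order-of-magnitude illustration rather than a forecast.

```python
def project_with_cagr(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Inverse operation: the constant annual rate linking two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# 27 thousand units in 2017, growing at 24% per year until 2024 (7 years).
units_2024 = project_with_cagr(27_000, 0.24, 2024 - 2017)
print(f"Projected units in 2024: {units_2024:,.0f}")
```

Under these assumptions the unit count grows by a factor of about 4.5 over the seven years, to roughly 120 thousand units.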

Partners: SYR, EMBRT, ANYWI

This demonstrator is an overview of the current and future national and European drone regulations and the influence of management systems. The specific drone category as defined by EASA is considered and a regulatory framework report is created. The focus will be on several areas outlined below. The special focus of this demonstrator will be on beyond visual line of sight (BVLOS) operations, which are complex and will play a key role in most of the future business cases for commercial drone operations. First, the focus will be on the German and European regulations. From 2020 on, the European regulations will be implemented in the German regulation framework. The task here will be to explain how the European regulations are interpreted and how the requirements are embedded in the German framework. In the course of the project, the particularities of other European nations will be included. The task here will be to describe how the European regulations are implemented in other European member states and to outline the main differences between the countries in terms of the interpretation of requirements. Furthermore, the effects of the regulations on Unmanned Air Traffic Management (UTM, U-space) will be discussed. The task here is to provide an outlook on how drone operations will be integrated into the airspace and which technologies are needed. Drone operator organisations will also be part of the demonstrator. The task here is to describe how these organisations need to implement appropriate safety management. Finally, the regulatory framework report is compared with international regulatory frameworks. This demonstrator provides a quick overview of the necessary requirements and regulations for different nations in Europe.

Partners: SYR, EMBRT, ANYWI, HUA

The second demonstrator of this SC is an analysis of the future drone market. This analysis can be divided into environment level, aviation level and technology level. The environment level consists of an environment and scenario analysis. This process includes the following points:

Problem definition: The task is to identify future areas of use and to provide solutions to problems that could not be solved without the use of drones.

Environment analysis: The task here is to find out under which conditions drone-based solutions can be implemented, from the technical as well as from the financial perspective.

Consistency analysis: The goal of this analysis is to discuss how the available technologies are used and implemented over a wide range of different use cases.

Scenario frameworks and storyboards: Based on the above analyses, various scenario frameworks will be defined and storyboards described.

Implication analysis: The task here is to analyse how drone operations will affect other business and work areas in industry and economy.

Scenario transfer: Key to the use of drone technology is a well-planned transfer into existing work areas and organisations. The task is to give advice on how drone technologies can fit into existing systems.

At the aviation level, the market and the stakeholders are considered in detail. In addition to the market analysis and stakeholder analysis, a concept of operations is created. Finally, the topics requirements & constraints, technology analysis & assessment and market potential & risk analysis are dealt with at the technology level.

Partners: SYR, EMBRT, ANYWI

This demonstrator develops guidelines, checklists and templates. The created documents should facilitate the development of hardware, software and drone systems, as well as the operation of drones. The guidelines should make the planning and execution of regulatory alignment activities easier, the checklists should help to validate and verify hardware, software and UAS, and the templates should support the production of documentary evidence. The documents of this demonstrator are created for the specific category as defined by EASA. As in demonstrator 10.1, Germany and Europe are considered first, and then the particularities of other European states are addressed.

Acknowledgement

The JU receives support from the European Union’s Horizon 2020 research and innovation programme and Germany, Netherlands, Austria, France, Sweden, Cyprus, Greece, Lithuania, Portugal, Italy, Finland, Turkey.

© 2024 ADACORSA
